Details

- Type: Bug
- Status: Resolved
- Priority: Major
- Resolution: Fixed
- Affects Version/s: 5.0.0
- Fix Version/s: 5.0.1
- Component/s: Topology/network
- Security Level: Public
- Environment: -

Issue Links

| Depends | ||||||

|---|---|---|---|---|---|---|


Activity

| Field | Original Value | New Value |

|---|---|---|

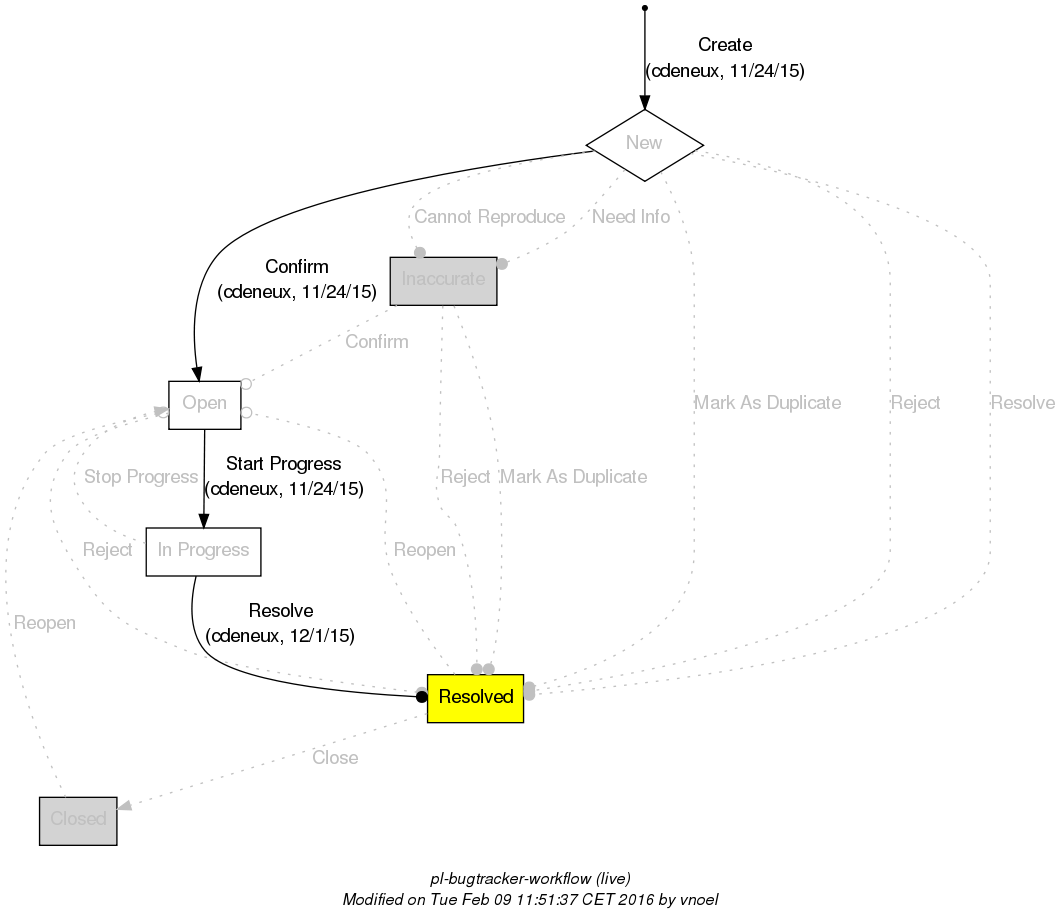

| Status | New [ 10000 ] | Open [ 10002 ] |

| Priority | Major [ 3 ] |

| Status | Open [ 10002 ] | In Progress [ 10003 ] |

| Fix Version/s | 5.0.1 [ 10579 ] |

| Link |

This issue blocks |

| Link |

This issue depends on |

| Link |

This issue depends on |

Description, original value:

From a container, I invoke a service located on the same container. The service invocation succeeds.

When I attach this container to another topology, I get the following error that should not occur as it is a local invocation (same log and stack trace as in the new value).

Description, new value:

From a container, I invoke a service located on the same container. The service invocation succeeds.

When I attach this container to another topology *concurrently to service invocations*, I get the following error that should not occur as it is a local invocation:

{code}
RMIComponentContext_CLI_moving_new_container_to_an_existing_topology_initial-container-0, iteration #8, [consumer]: Test to invoke a local service on the container 'initial-container-0'
RMIComponentContext_CLI_moving_new_container_to_an_existing_topology_initial-container-0, iteration #8: Running test 'Invoke a local service on the container 'initial-container-0'' on operation '{http://petals.ow2.org/}hello' with mep 'IN_OUT'
javax.jbi.messaging.MessagingException: org.ow2.petals.microkernel.api.jbi.messaging.RoutingException: No endpoint(s) matching the target service '{http://petals.ow2.org/}HelloService' for Message Exchange with id 'petals:uid:2b23a290-929b-11e5-a27f-0090f5fbc4a1'
    at org.ow2.petals.microkernel.jbi.messaging.exchange.DeliveryChannelImpl.sendExchange(DeliveryChannelImpl.java:406)
    at org.ow2.petals.microkernel.jbi.messaging.exchange.DeliveryChannelImpl.send(DeliveryChannelImpl.java:172)
    at org.objectweb.petals.tools.rmi.server.remote.implementations.RemoteDeliveryChannelImpl.send(RemoteDeliveryChannelImpl.java:328)
    at sun.reflect.GeneratedMethodAccessor33.invoke(Unknown Source)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:606)
    at sun.rmi.server.UnicastServerRef.dispatch(UnicastServerRef.java:322)
    at sun.rmi.transport.Transport$2.run(Transport.java:202)
    at sun.rmi.transport.Transport$2.run(Transport.java:199)
    at java.security.AccessController.doPrivileged(Native Method)
    at sun.rmi.transport.Transport.serviceCall(Transport.java:198)
    at sun.rmi.transport.tcp.TCPTransport.handleMessages(TCPTransport.java:567)
    at sun.rmi.transport.tcp.TCPTransport$ConnectionHandler.run0(TCPTransport.java:828)
    at sun.rmi.transport.tcp.TCPTransport$ConnectionHandler.access$400(TCPTransport.java:619)
    at sun.rmi.transport.tcp.TCPTransport$ConnectionHandler$1.run(TCPTransport.java:684)
    at sun.rmi.transport.tcp.TCPTransport$ConnectionHandler$1.run(TCPTransport.java:681)
    at java.security.AccessController.doPrivileged(Native Method)
    at sun.rmi.transport.tcp.TCPTransport$ConnectionHandler.run(TCPTransport.java:681)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)
    at java.lang.Thread.run(Thread.java:745)
    at sun.rmi.transport.StreamRemoteCall.exceptionReceivedFromServer(StreamRemoteCall.java:275)
    at sun.rmi.transport.StreamRemoteCall.executeCall(StreamRemoteCall.java:252)
    at sun.rmi.server.UnicastRef.invoke(UnicastRef.java:161)
    at java.rmi.server.RemoteObjectInvocationHandler.invokeRemoteMethod(RemoteObjectInvocationHandler.java:227)
    at java.rmi.server.RemoteObjectInvocationHandler.invoke(RemoteObjectInvocationHandler.java:179)
    at com.sun.proxy.$Proxy15.send(Unknown Source)
    at com.ebmwebsoucing.integration.client.rmi.RMIClient.runConsumerIntegration(RMIClient.java:425)
    at com.ebmwebsoucing.integration.client.rmi.RMIClient.main(RMIClient.java:259)
{code}

| Field | Original Value | New Value |
|---|---|---|
| Status | In Progress [ 10003 ] | Resolved [ 10004 ] |
| Resolution | | Fixed [ 1 ] |


When the Fractal components associated with the JBI artefacts are stopped, the JBI artefacts are stopped too: the state STOPPED is not persisted, but their endpoints are deactivated.

As these Fractal components are stopped before the router, there is a short window during which the error mentioned in the issue summary can occur.

It makes no sense to stop the JBI artefacts and their associated Fractal components:

- the external listeners of JBI components must always accept incoming requests during container attachment/detachment
- only incoming message exchanges at NMR level must be blocked during the attachment/detachment process, for example at the router entry point
- to migrate registry data from one registry to the other, it is sufficient to perform the migration while the router is stopped. That's why PETALSESBCONT-358 has been reopened.

So, the right process to block service invocations is something like: ... (PETALSESBCONT-368).
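The idea of blocking message exchanges at the router entry point, while the components themselves stay started, can be illustrated with a minimal sketch. It is not the fix shipped in 5.0.1: the class `RouterEntryGate` and its methods are hypothetical names, and the read/write lock is an assumption chosen for illustration. Exchanges take the shared lock, so they run in parallel; an attachment/detachment step takes the exclusive lock, so exchanges wait for it instead of failing with a `RoutingException`.

```java
import java.util.concurrent.locks.ReadWriteLock;
import java.util.concurrent.locks.ReentrantReadWriteLock;
import java.util.function.Supplier;

/**
 * Hypothetical gate placed at the router entry point.
 * None of these names come from the Petals code base.
 */
final class RouterEntryGate {

    // Fair mode, so that a waiting topology change is not starved
    // by a steady flow of message exchanges.
    private final ReadWriteLock lock = new ReentrantReadWriteLock(true);

    /** Routes one message exchange; several exchanges may be routed in parallel. */
    <T> T route(Supplier<T> routing) {
        lock.readLock().lock();
        try {
            return routing.get();
        } finally {
            lock.readLock().unlock();
        }
    }

    /** Runs an attachment/detachment step while no exchange is being routed. */
    void duringTopologyChange(Runnable change) {
        lock.writeLock().lock();
        try {
            change.run();
        } finally {
            lock.writeLock().unlock();
        }
    }

    public static void main(String[] args) {
        RouterEntryGate gate = new RouterEntryGate();
        // Stand-in for the registry migration done while routing is blocked.
        gate.duringTopologyChange(() -> System.out.println("registry data migrated"));
        // Stand-in for a local service invocation going through the router.
        System.out.println(gate.route(() -> "exchange routed"));
    }
}
```

With such a gate, external listeners keep accepting requests during the topology change; the corresponding exchanges are only delayed at `route(...)` until the change completes.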